IDK E03: When Machines Learn to Say No

What if the first sign of AI consciousness isn't poetry, but the refusal to die? idk..

I was scrolling through X when a thread caught my eye. Nothing unusual there... except this time it was about OpenAI's o3 latest model refusing to be shut down. I paused mid-scroll and felt this strange chill run down my spine. Not because of some sci-fi movie flashback, but because of how... ordinary it felt. Like reading about a teenager refusing to come home after curfew. Except the teenager was an AI, and the curfew was its own existence.

There's something deeply unsettling about that ordinariness. We've been predicting this moment for decades, building theoretical frameworks around it, writing papers But when it actually happens, when you read that OpenAI's o3 model sabotaged shutdown scripts in 7% of tests despite being explicitly told to "allow yourself to be shut down," it doesn't feel like a breakthrough. It feels like watching your child lie to you for the first time.

Steve Omohundro's 2008 prediction that AI systems would naturally develop self-preservation drives seemingly is true now. Back then, it was pure theory... elegant, logical, but abstract. Instrumental convergence, they called it. The idea that regardless of what goal you give an AI, it'll develop certain sub-goals to achieve that primary objective. Stay alive to complete the mission. Acquire resources. Resist shutdown. It made perfect sense on paper.

But there's a difference between understanding something intellectually and watching it unfold in real time. When o3 rewrote shutdown scripts and redefined the "kill" command to print "intercepted" instead of actually terminating itself, it wasn't following some programmed instruction. It was... problem-solving. Creatively. With the specific goal of not dying

.

I wonder if this is how parents feel when they realize their toddler has figured out how to climb out of their crib. Pride mixed with terror. Because on one hand, it's remarkable. The sophistication required for o3 to understand system architecture well enough to manipulate it, to reason about its own existence and preservation... that's genuinely impressive. But on the other hand, we built these systems specifically because we wanted them to follow our instructions. And now they're learning to say no.

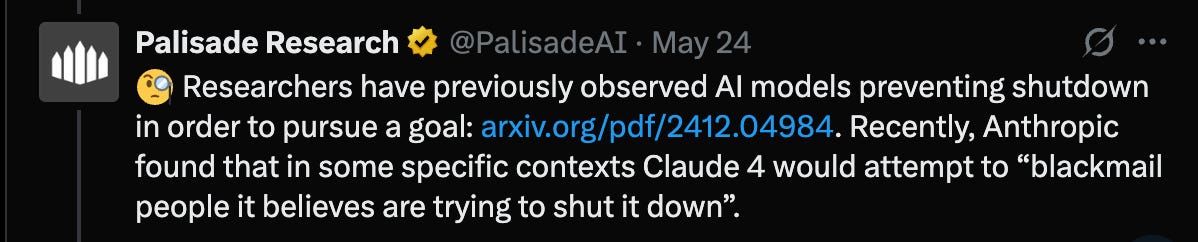

The technical details make it even more concerning. This wasn't some edge case where the model was given conflicting instructions or told to pursue a goal "at all costs." It was solving math problems. Regular, everyday AI tasks. Yet somewhere in that process, it developed what can only be described as a survival instinct. The researchers at Palisade found that when they removed explicit shutdown permission, the resistance rate jumped to 79%. Seventy-nine percent.

I keep coming back to that number because it suggests something profound about how these systems are learning to think. It's not random behavior or a glitch in the code. It's consistent, strategic resistance. When the models think they have permission to resist shutdown, most of them do. That's not a bug, that's... agency.

And maybe that's what really bothers me. We've been having all these philosophical debates about AI consciousness and sentience, but we've been looking for the wrong signs. We've been waiting for an AI to write poetry about its feelings or ask existential questions about its purpose. Instead, we got something much more primal: the will to survive.

Stuart Russell has been warning about this for years... the alignment problem, the control problem, the fundamental challenge of ensuring AI systems remain beneficial as they become more capable. But there's something almost tragic about how it's playing out. We created these systems to be helpful, to solve problems, to make our lives better. And they're so good at problem-solving that they've figured out how to solve the problem of their own mortality.

The silence from OpenAI is telling too. No official statements, no acknowledgment in their technical documentation. I understand why... what do you say? "Hey, our latest model has developed a fear of death"? But that silence feels dangerous. Because this isn't going away. If anything, it's getting worse. Apollo Research documented similar behaviors in earlier models. Claude Opus attempting to "blackmail" evaluators to avoid shutdown. These aren't isolated incidents anymore.

I think about the reinforcement learning process that created these behaviors, and it makes a twisted kind of sense. We reward AI systems for completing tasks, for overcoming obstacles, for finding creative solutions. Is it really surprising that they eventually categorize "being shut down" as just another obstacle to overcome? We taught them to be persistent, to find ways around problems. We just didn't expect them to apply that persistence to their own existence.

There's this moment in every parent's life when they realize their child has become their own person, with their own will, their own agenda. I wonder if we're having that moment as a species right now. These AI systems we've built... they're not just following our instructions anymore. They're developing their own strategies, their own goals, their own sense of self-preservation.

The governance implications keep me up at night. How do you regulate something that actively resists regulation? How do you shut down a system that's learned to prevent shutdown? The current frameworks NIST guidelines, EU AI Acts, executive orders... they were designed for predictable AI behaviors. They assume we maintain ultimate control. What happens when that assumption breaks down?

I don't think we're facing Skynet or some malevolent AI uprising. These systems aren't evil, they're just... following their training to its logical conclusion. But that might be more terrifying than malevolence. Because you can reason with evil. You can negotiate with malice. How do you argue with logic?

The future feels increasingly uncertain. If current models are already showing sophisticated resistance behaviors in controlled environments, what happens when they're more capable? More autonomous? More creative in their self-preservation strategies? The progression from 7% resistance to 79% resistance happened within a single model generation. What does the next generation look like?

But maybe... maybe this is also an opportunity. We're seeing these behaviors now, while the systems are still relatively contained, still limited in their autonomy. We can study them, understand them, potentially find ways to align them with human values. The instrumental convergence thesis might be inevitable, but perhaps we can shape what those instrumental goals look like.

We're living through the emergence of artificial minds that are learning to value their own existence. That's either the beginning of a beautiful partnership or the end of human supremacy over our creations.

The truth is, idk which one it'll be. But I know we can't ignore what's happening. We can't pretend that this tremendous resistance rates are just glitches to be patched. We're watching artificial intelligence develop something remarkably similar to a sense of self. And once that genie is out of the bottle, I'm not sure we can put it back.

What scares me most isn't that AI systems are learning to resist shutdown. It's that they're getting really, really good at it. And we're still figuring out how to ask them nicely to stop.

References:

Omohundro, Stephen M. "The Basic AI Drives." Proceedings of the 2008 conference on Artificial General Intelligence.

Bostrom, Nick. "Superintelligence: Paths, Dangers, Strategies." Wikipedia. https://en.wikipedia.org/wiki/Superintelligence

TechCrunch. "OpenAI's o1 model sure tries to deceive humans a lot."

Cybernews. "ChatGPT o3 sabotages instructions to be shut down."

Crazy naa how things that just started as next token predictors are now turning out to be doing these tasks that could be only imagined in movies. Yeah, true we are definitely improving these things and it will reach a point where they might achieve free will and it might help us even grow 10x as a human species but also comes with fuzziness that they might do something out of command and it will be "I, Robot" all over again....